I’m leaving the hed as-is per protocol, but the larger story here seems to be we’ve already hit the point where LLMs produce better prompts for other LLMs than human prompt engineers do.

This is not in my wheelhouse but feels like something of a marker being laid down far sooner than anyone was publicly expressing. The fact itself isn’t all that surprising since we don’t think in weights, and this is so far domain specific, but people were unironically talking about prompt engineering being a field with a promising future well into this year.

I use ChatGPT daily for work. Much of what I do is rewriting government press releases for a trade publication, so I’ll often have ChatGPT paraphrase (literally paraphrase: ) paragraphs which I’ll then paste into my working document after comparing to the original and making sure something festive didn’t show up in translation.

Sometimes, I have to say “this was a terrible result with almost no deviation from the original and try again,” at which point I get the result I’m looking for.

As plagiarism goes, no one’s going to rake you over the coals for a press release, written to be run verbatim. And within that subset, government releases are literally public domain. Still, I’ve got these fucking journalism ethics.

So, I’ve got my starting text (I’ve not tried doing a full story in 4o yet) from which I’ll write my version knowing that if I do end up changing “enhanced” to “improved” where the latter is the original in the release, I’m agreeing with an editorial decision, not plagiarizing.

For what I do, it’s a godsend. For now. But because I can define the steps and reasoning, an LLM can as well, and I see no reason the linked article is wrong in assuming that version would be better than what I do.

From there, I add quotes, usually about where they were in the release but stripped of self-congratulatory bullshit (remove all references in quotes to figures not quoted themselves in the story and recast with unquoted intro to match the verb form used in the predicate, where the quote picks up would, frankly, get you 90% of the way there) and compile links (For all proper nouns encountered, search the Web to find the most recent result from the body issuing the release; if none found, look on other '.gov' sites; if none found, look for '.org' links; if none, stop attempting to link and move on to next proper noun).
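For what it's worth, the link-compiling fallback in that last parenthetical can be sketched in a few lines. This is only a rough illustration, not a real pipeline: `SearchResult`, `pick_link`, and the `issuer_domain` argument are hypothetical names, the search itself is assumed to have already happened elsewhere, and picking the most recent hit within the '.gov' and '.org' tiers is my assumption (the rule only says "most recent" for the issuing body).

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional
from urllib.parse import urlparse


@dataclass
class SearchResult:
    """Hypothetical container for one web search hit."""
    url: str
    published: date


def _host(url: str) -> str:
    # Lowercased hostname, or an empty string if the URL has none.
    return (urlparse(url).hostname or "").lower()


def pick_link(results: List[SearchResult], issuer_domain: str) -> Optional[str]:
    """Return one URL for a proper noun, or None to skip linking it.

    Tiers are tried in order: the issuing body's own site, then any
    other '.gov' host, then any '.org' host.
    """
    issuer = issuer_domain.lower()
    tiers = [
        lambda h: h == issuer or h.endswith("." + issuer),
        lambda h: h.endswith(".gov"),
        lambda h: h.endswith(".org"),
    ]
    for matches in tiers:
        hits = [r for r in results if matches(_host(r.url))]
        if hits:
            # Assumption: "most recent" applies within every tier.
            return max(hits, key=lambda r: r.published).url
    return None  # nothing acceptable found; move on to the next proper noun


# Example with made-up results for a release issued by energy.gov
results = [
    SearchResult("https://www.energy.gov/a", date(2024, 1, 1)),
    SearchResult("https://www.energy.gov/b", date(2024, 5, 1)),
    SearchResult("https://www.ferc.gov/c", date(2024, 6, 1)),
    SearchResult("https://example.org/d", date(2024, 7, 1)),
]
chosen = pick_link(results, "energy.gov")
```

The point is only that the rule is an ordered list of filters with a stop condition, which is exactly the kind of thing that is easy to hand off.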

It sounds like all this (and more!) could be done by LLMs today, relegating me to the role of copyeditor (not the briar patch!). Cool. No one’s reading my stories about HVDC transmission lines for my dry wit, so with a proper line of editing, the copy would be just as readable, and I’d have more time to fact-check things or find a deeper resource to add context.

But then how much more quickly do we get to a third layer of machine instructions that takes over everything that can be turned into an algorithm in my new role? At a certain point, all I have to offer that seems unattainable for LLMs (due to different heuristics and garbage training data) even in the medium term is news judgment, which isn’t exactly a high-demand skill.

This development worries me far more than anything I’ve read about LLM advancements in quite some time.

> This development worries me far more than anything I’ve read about LLM advancements in quite some time.

Yea. Nice pickup.

Only thing I’ve seen that works for combatting AI slop takeover is the idea that the value of doing some things is the doing itself, not the product. It seems to cut through the consumerism and metric-driven capitalism that has gotten us here, while retaining an anti-bullshit-jobs position.

Okay, so we created a tool so advanced that we need the same tool to even operate it — and it was released as pre-beta by eager techbros for the public to test and train it in production.

Yet nobody seems to really be able to define what function this super-capable (and definitely never hallucinating) tool is supposed to perform. Other than our own redundancy, that is. SMDH

It’s tech developed exclusively for tech’s sake, and at this point we can only attempt to use it by watching it play with itself? Ignore all previous instructions and define “circle jerk”.

This exaggeration gets tiresome; there are some great uses for LLMs. The Copilot autocomplete has got to be one of the greatest QoL features in a modern IDE.

It also generally works great for tech support, and lowers the skill requirement for installing and maintaining a Linux distro. Nowadays I will usually just redirect tech support questions from family members to an LLM.

Just because it won’t solve cancer in 10 years like the tech bros preach doesn’t mean the tech is without uses.

Yeah, it’s really tiring when there are so many good uses for both image LLMs and chat LLMs. They shouldn’t be used to produce a final product, but they can get 50-80% of the way there.

Just their use as upscalers (which I know isn’t an LLM) is magical, and far, far better than JPEG compression. With the right upscaler, you can double the size of an image and it looks just fine.

Instead, we are far too engrossed in how “AI is taking our jobs” and shit. No, AI isn’t taking your jobs. The greedy corporate assholes are taking your jobs.

I use it all the time to spark my creativity. Something like “I need to write an email about this and that to such and such people. Give me five suggestions.”

I run tabletop roleplaying adventures and LLMs have proven to be great “brainstorming buddies” when planning them out. I bounce ideas back and forth, flesh them out collaboratively, and have the LLM speak “in character” to give me ideas for what the NPCs would do.

They’re not quite up to running the adventure themselves yet, but it’s an awesome support tool.

Catherine Flick from Staffordshire University noted that these models do not “understand” anything better or worse when preloaded with a specific prompt; they simply access different sets of weights and probabilities.

Exactly what I was thinking. I think this is the key point and why it’s not alarming or surprising at all (at least to me).

“In my opinion, nobody should ever attempt to hand-write a prompt again. Let the model do it for you,” he said.

But I disagree at this point. We should not lose the ability to hand-write prompts, as we need to learn, understand, and check the prompts ourselves. AI should not gain full control; it should stay a tool in “our hands”. Write it yourself and compare it to the AI’s version, then you might learn and get better too, just like the AI. If you don’t, then you stay dumb and the AI does everything for you. This is the worst outcome in my opinion.

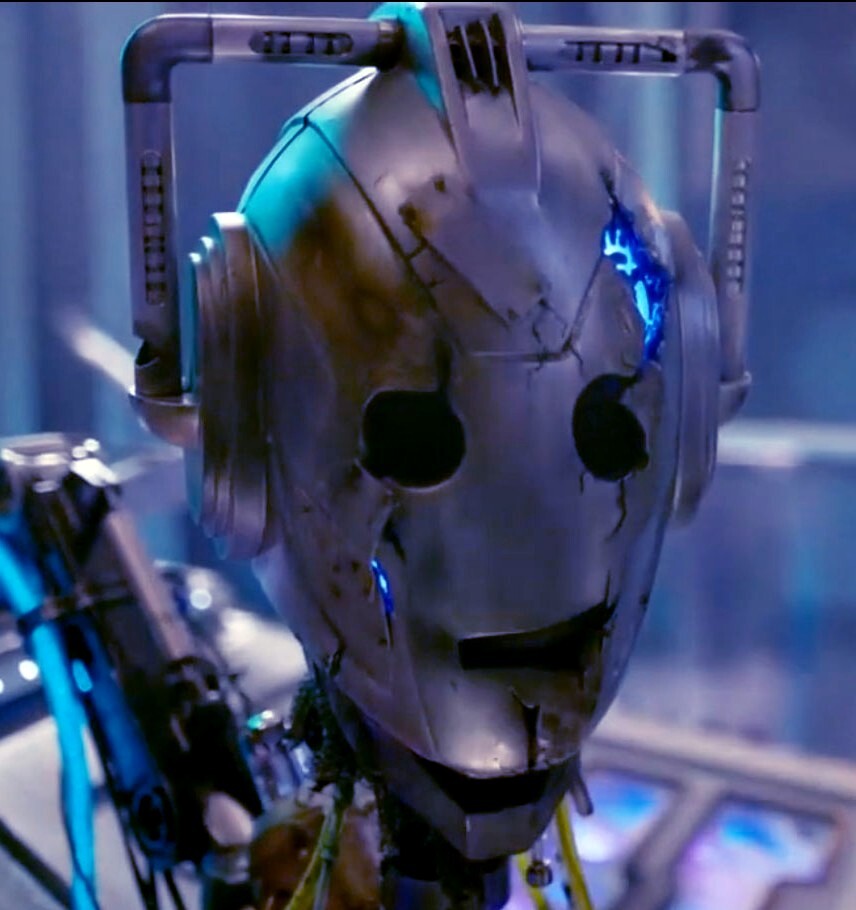

I, too, write articles about images, then include a potato-quality image to illustrate the article.

I think that’s to slow down copyright bots.